This tutorial covers the Node.js `stream` module, which lets you read data from a source and write data to a destination piece by piece, as a sequence of chunks, instead of loading everything into memory at once. Node.js provides readable and writable streams, along with duplex and transform streams that combine the two.

The `stream` module can be loaded as follows:

```javascript
const stream = require('stream');
```

Node.js stream types

Node.js provides four fundamental stream types:

| Stream type | Description | Example |
|---|---|---|
| Readable | A stream from which data can be read | `fs.createReadStream()` |
| Writable | A stream to which data can be written | `fs.createWriteStream()` |
| Duplex | A stream that is both readable and writable | `net.Socket` |
| Transform | A Duplex stream that can modify or transform data as it is written and read | `zlib.createDeflate()` |
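As a minimal sketch of three of these types, the snippet below builds a Readable from an in-memory array with `Readable.from()`, a Transform that upper-cases text, and a Writable that collects what it receives. The names `source`, `upper`, `sink`, and `received` are illustrative only.

```javascript
const { Readable, Writable, Transform } = require('stream');

// Readable: produces chunks, here taken from an in-memory array.
const source = Readable.from(['a', 'b', 'c']);

// Transform: a Duplex whose output is computed from its input.
const upper = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  },
});

// Writable: consumes chunks; write() is called once per chunk.
const received = [];
const sink = new Writable({
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    callback(); // tell the stream this chunk has been handled
  },
});

// Connect them: source -> upper -> sink.
source.pipe(upper).pipe(sink);
sink.on('finish', () => console.log(received.join(''))); // ABC
```

A real-world Duplex is a TCP socket (`net.Socket`), where the readable and writable sides carry unrelated data.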

Node.js stream events

Streams are instances of `EventEmitter`. The most commonly used events are:

| Event | Emitted by | Description |
|---|---|---|
| `data` | Readable | Fires when a chunk of data is available to read |
| `end` | Readable | Fires when there is no more data to read |
| `error` | Readable and Writable | Fires when an error occurs while reading or writing |
| `finish` | Writable | Fires after `end()` has been called and all data has been flushed to the underlying system |
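A small sketch of where each event comes from, using in-memory streams instead of files. The `failing` stream and its `'disk full'` message are hypothetical, used only to trigger an `error` event.

```javascript
const { Readable, Writable } = require('stream');

// 'data' and 'end' are emitted by a Readable.
const readable = Readable.from(['hello', 'world']);
const received = [];
readable.on('data', (chunk) => received.push(chunk));
readable.on('end', () => console.log('end, received:', received));

// 'finish' is emitted by a Writable once end() has been called
// and every pending write has completed.
const writable = new Writable({
  write(chunk, encoding, callback) {
    callback();
  },
});
writable.on('finish', () => console.log('finish'));
writable.end('last chunk');

// 'error' is emitted when an operation fails; this hypothetical
// Writable reports a failure for every chunk it receives.
const failing = new Writable({
  write(chunk, encoding, callback) {
    callback(new Error('disk full'));
  },
});
failing.on('error', (err) => console.log('error:', err.message));
failing.write('some data');
```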

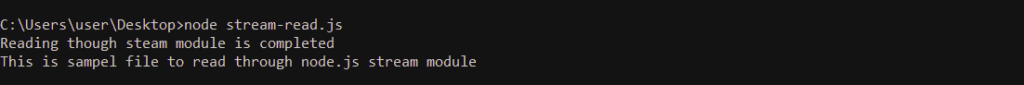

Reading data from a Node.js stream

```javascript
const fs = require('fs');

let data = '';

// Create a readable stream for the input file
const readerStream = fs.createReadStream('stream-readable-file.txt');

// Decode incoming chunks as UTF-8 strings instead of Buffers
readerStream.setEncoding('utf8');

// Append each chunk as it arrives
readerStream.on('data', (chunk) => {
  data += chunk;
});

// The whole file has been read
readerStream.on('end', () => {
  console.log(data);
});

// Reading failed, for example because the file does not exist
readerStream.on('error', (err) => {
  console.error(err.stack);
});

// This line runs first: the stream events above fire asynchronously
console.log('Stream handlers registered; the file contents will follow.');
```
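Readable streams are also async iterables in current Node.js versions, so the same read-everything-then-print logic can be written with `for await...of`. This is a sketch: `readAll` is a hypothetical helper name, and it is demonstrated on an in-memory stream so it runs without the input file.

```javascript
const { Readable } = require('stream');

// Hypothetical helper: collect every chunk of a readable stream into one string.
// A read error makes the for-await loop throw, which rejects the returned promise.
async function readAll(readable) {
  let data = '';
  for await (const chunk of readable) {
    data += chunk;
  }
  return data;
}

// Demo with an in-memory stream; for the file above you could pass
// fs.createReadStream('stream-readable-file.txt', { encoding: 'utf8' }) instead.
readAll(Readable.from(['Hello, ', 'streams!']))
  .then((text) => console.log(text))
  .catch((err) => console.error(err.stack));
```

Compared with the event-based version, a single `.catch()` replaces the separate `error` handler.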

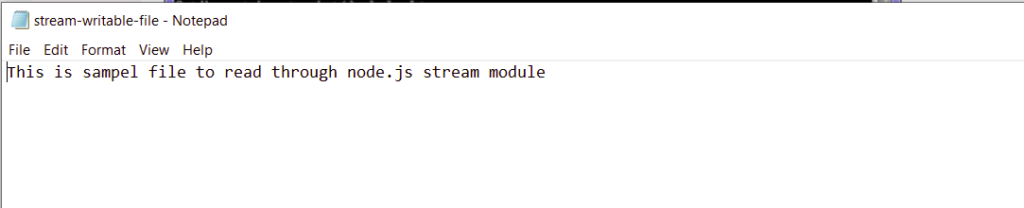

Writing data to a Node.js stream

```javascript
const fs = require('fs');

// Custom message to write
const dataToWrite = 'This is an example of writing data to a file using a writable stream.';

// Create a writable stream for the output file
const writerStream = fs.createWriteStream('stream-writable-file.txt');

// Register the event handlers before writing
writerStream.on('finish', () => {
  console.log('Write completed.');
});

writerStream.on('error', (err) => {
  console.error(err.stack);
});

// Write the data with UTF-8 encoding, then signal that no more data will follow
writerStream.write(dataToWrite, 'utf8');
writerStream.end();

// This line runs before 'finish': the write happens asynchronously
console.log('Data queued for writing through the stream module.');
```
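`write()` returns `false` once the internal buffer reaches the stream's `highWaterMark`; a well-behaved producer should then wait for the `drain` event before writing more. A sketch of that pattern, assuming a hypothetical slow destination (`slowSink`) and helper (`writeMany`):

```javascript
const { Writable } = require('stream');

// Hypothetical slow destination: each chunk takes 10 ms to "store".
const slowSink = new Writable({
  highWaterMark: 16, // bytes; tiny on purpose so backpressure kicks in quickly
  write(chunk, encoding, callback) {
    setTimeout(callback, 10);
  },
});

// Write `count` chunks, pausing whenever write() returns false
// and resuming once 'drain' signals that the buffer has emptied.
function writeMany(stream, count, done) {
  let i = 0;
  function writeNext() {
    while (i < count) {
      const ok = stream.write(`chunk ${i}\n`);
      i += 1;
      if (!ok) {
        stream.once('drain', writeNext);
        return;
      }
    }
    stream.end(done); // done runs when the stream finishes
  }
  writeNext();
}

writeMany(slowSink, 5, () => console.log('all chunks written'));
```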

Node.js stream buffering

Both readable and writable streams store data in an internal buffer. The buffered data can be retrieved through the following properties:

| Stream type | Property holding the buffered data |
|---|---|
| Readable streams | `readable.readableBuffer` |
| Writable streams | `writable.writableBuffer` |
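`readableBuffer` and `writableBuffer` expose internal data structures whose exact shape is an implementation detail, so this sketch checks the documented numeric properties `readableLength` and `writableLength`, which report how much data is currently buffered:

```javascript
const { Readable, Writable } = require('stream');

// A Readable fed manually with push(); nothing consumes it,
// so every pushed chunk stays in the internal buffer.
const readable = new Readable({ read() {} });
readable.push('abc');
readable.push('defg');
console.log(readable.readableLength); // 7 bytes waiting to be read

// A Writable whose write() deliberately never calls its callback,
// so the first chunk stays "in progress" and later chunks queue up.
const writable = new Writable({ write(chunk, encoding, callback) {} });
writable.write('12345');
writable.write('678');
console.log(writable.writableLength); // 8 bytes accepted but not yet processed
```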

The amount of data that can be buffered depends on the `highWaterMark` option passed to the stream's constructor. For normal streams, `highWaterMark` specifies a number of bytes; for streams operating in object mode, it specifies a number of objects.
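The difference between counting bytes and counting objects shows up in the return value of `push()`, which becomes `false` once the buffer reaches `highWaterMark`. A sketch with deliberately tiny limits:

```javascript
const { Readable } = require('stream');

// Byte mode: highWaterMark counts bytes.
const bytes = new Readable({ highWaterMark: 4, read() {} });
console.log(bytes.push('ab')); // true: 2 bytes buffered, below the limit
console.log(bytes.push('cd')); // false: 4 bytes buffered, limit reached

// Object mode: highWaterMark counts objects, whatever their size.
const objects = new Readable({ objectMode: true, highWaterMark: 2, read() {} });
console.log(objects.push({ id: 1, payload: 'x'.repeat(1000) })); // true: 1 object buffered
console.log(objects.push({ id: 2, payload: 'y'.repeat(1000) })); // false: 2 objects buffered
```

A `false` return is advisory: the data is still buffered, but the producer should stop pushing until `read()` is called again.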

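Data is buffered in a readable stream when the implementation calls `stream.push(chunk)`. If the consumer does not call `stream.read()`, the data stays in the internal queue until it is consumed.

Data is buffered in a writable stream when `writable.write(chunk)` is called repeatedly. While the total buffered size is below `highWaterMark`, `write()` returns `true`; once the limit is reached, it returns `false`.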

A key goal of the stream API, and of the `stream.pipe()` method in particular, is to keep buffering at acceptable levels, so that a fast source does not overwhelm a slower destination and exhaust the available memory.
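To illustrate how `pipe()` keeps buffering bounded, this sketch pipes a fast in-memory source into a deliberately slow, hypothetical Writable and records the largest amount of data that Writable ever had buffered:

```javascript
const { Readable, Writable } = require('stream');

// A fast source: 100 chunks of 1 KiB that are available immediately.
const chunks = Array.from({ length: 100 }, () => Buffer.alloc(1024));
const source = Readable.from(chunks, { objectMode: false });

// A hypothetical slow destination with a 4 KiB limit; each write takes ~1 ms.
let maxBuffered = 0;
let totalBytes = 0;
const slowSink = new Writable({
  highWaterMark: 4096,
  write(chunk, encoding, callback) {
    maxBuffered = Math.max(maxBuffered, this.writableLength);
    totalBytes += chunk.length;
    setTimeout(callback, 1);
  },
});

// pipe() pauses the source whenever slowSink.write() returns false and
// resumes it on 'drain', so the sink never buffers the full 100 KiB.
source.pipe(slowSink);
slowSink.on('finish', () => {
  console.log(`copied ${totalBytes} bytes, max buffered: ${maxBuffered} bytes`);
});
```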

Duplex and Transform streams are both readable and writable, so each maintains two separate internal buffers, one for reading and one for writing. This lets each side operate independently.
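A minimal sketch of a Duplex whose two sides buffer independently: data pushed to the readable side and data written to the writable side are counted separately. The `write` implementation deliberately never completes so that the written data stays buffered.

```javascript
const { Duplex } = require('stream');

const duplex = new Duplex({
  // Readable side: data is supplied manually with push() below.
  read() {},
  // Writable side: never calls callback, so written data stays buffered.
  write(chunk, encoding, callback) {},
});

duplex.push('from the readable side'); // lands in the read buffer
duplex.write('to the writable side');  // lands in the write buffer

// Each side reports its own buffered byte count.
console.log(duplex.readableLength); // 22
console.log(duplex.writableLength); // 20
```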

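Node.js piping streams

Piping is the mechanism in which the output of one stream becomes the input of another stream. `readable.pipe(destination)` also manages the flow of data, pausing the source when the destination's buffer is full and resuming it once the buffer drains.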

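```javascript
const fs = require('fs');

const readerStream = fs.createReadStream('stream-readable-file.txt');
const writerStream = fs.createWriteStream('stream-writable-file.txt');

// Send everything read from readerStream into writerStream
readerStream.pipe(writerStream);

// 'finish' fires on the destination once all piped data has been written
writerStream.on('finish', () => {
  console.log('Node.js piping stream program completed');
});
```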

Once the program finishes, the contents of `stream-readable-file.txt` have been copied into `stream-writable-file.txt`.
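`pipe()` does not forward errors from one stream to the next, and if the source fails, the destination is not closed automatically. `stream.pipeline()` handles both and reports the outcome through a single callback. A sketch of the same copy using it; the temp-directory paths and the `copyFile` helper are assumptions made so the example runs anywhere:

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { pipeline } = require('stream');

// Hypothetical paths in the OS temp directory so the sketch runs anywhere.
const input = path.join(os.tmpdir(), 'stream-readable-file.txt');
const output = path.join(os.tmpdir(), 'stream-writable-file.txt');
fs.writeFileSync(input, 'Content copied with stream.pipeline().\n');

// Hypothetical helper: copy src to dest. pipeline() pipes the streams together,
// destroys all of them if any one fails, and calls back once with the outcome.
function copyFile(src, dest, callback) {
  pipeline(fs.createReadStream(src), fs.createWriteStream(dest), callback);
}

copyFile(input, output, (err) => {
  if (err) {
    console.error('Copy failed:', err.message);
  } else {
    console.log('Copy completed');
  }
});
```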

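Node.js chaining streams

Chaining connects several streams into a sequence of operations, with the output of each stream becoming the input of the next. Because `pipe()` returns the destination stream, calls can be chained as long as each intermediate stream is also readable, such as a Duplex or Transform stream. The following example compresses a file with gzip: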

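```javascript
const fs = require('fs');
const zlib = require('zlib');

// Read the file, gzip-compress it, and write the compressed bytes to a .gz file
fs.createReadStream('stream-readable-file.txt')
  .pipe(zlib.createGzip())
  .pipe(fs.createWriteStream('stream-readable-file.txt.gz'))
  // The last pipe() returns the write stream, so 'finish' marks completion
  .on('finish', () => {
    console.log('The input file is compressed successfully.');
  });
```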
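Custom Transform streams can be inserted anywhere in such a chain. This sketch upper-cases text before gzip-compressing it and collects the result in memory; `upperCase` and `collect` are hypothetical names, and `stream.pipeline()` is used instead of chained `pipe()` calls so that an error in any stage reaches one callback.

```javascript
const zlib = require('zlib');
const { Readable, Transform, Writable, pipeline } = require('stream');

// Hypothetical custom step: upper-case the text before compressing it.
const upperCase = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  },
});

// Collect the compressed bytes in memory instead of writing a .gz file.
const compressed = [];
const collect = new Writable({
  write(chunk, encoding, callback) {
    compressed.push(chunk);
    callback();
  },
});

// Readable -> Transform -> Gzip -> Writable, with one completion callback.
pipeline(
  Readable.from(['hello ', 'stream ', 'chaining']),
  upperCase,
  zlib.createGzip(),
  collect,
  (err) => {
    if (err) {
      console.error('Chain failed:', err);
      return;
    }
    // Decompress to confirm the round trip.
    const restored = zlib.gunzipSync(Buffer.concat(compressed)).toString();
    console.log(restored); // HELLO STREAM CHAINING
  }
);
```

Upper-casing each chunk independently is only safe for single-byte text such as ASCII, because a multi-byte UTF-8 character could be split across two chunks.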